Application Scenarios | Hyperspectral Facial Automated Recognition

This article introduces the application of hyperspectral imaging to face recognition.

Hyperspectral Facial Automated Recognition

1. Preface

Facial recognition is widely used in fields such as criminal investigation and access control because it is stable and does not require cooperation from the subject. However, facial images are highly susceptible to factors such as lighting and viewing angle, which can significantly degrade recognition. Developing an appropriate recognition method, or designing a system that performs facial recognition effectively, has therefore become crucial to applying this technology successfully.

The Pearson discriminative network method for automated facial recognition uses a Siamese (twin) convolutional neural network to reduce noise interference and prevent overfitting. It also integrates a multi-task cascaded convolutional neural network (MTCNN) into the FaceNet front end and adds a Pearson correlation coefficient discrimination module to identify deep features of the target face. Methods of this kind achieve automated facial recognition primarily from RGB images of the target face, and their recognition results are not ideal.

To address this, this paper proposes a hyperspectral facial automated recognition system based on artificial intelligence technology, developed by Optosky, that aims to better meet practical needs in fields such as identity verification and personalized marketing. The system is modular and built on an embedded architecture that collects and preprocesses hyperspectral facial images. The preprocessed image data is stored in RAM. The facial detection module retrieves the data from RAM, loads the Haar face classifier, and detects and extracts the facial region. The facial feature extraction and recognition module then extracts LBP features and builds and trains a LeNet-5 convolutional neural network model, which outputs the facial recognition results.

2. Technical Approach and Main Content

Application of Embedded Hyperspectral Facial Automated Recognition System Based on Artificial Intelligence Technology

2.1. Technical Approach

The system is based on the Hi3531 processor, whose maximum data processing frequency of 930 MHz gives the hyperspectral facial automated recognition system strong overall recognition performance and helps it meet real-time requirements. In operation, users issue commands through the human-machine interaction module to activate the image acquisition and processing module. This module collects and preprocesses hyperspectral facial images and stores the results in RAM. The facial detection module then performs facial detection on the preprocessed hyperspectral facial image data. Once detection is complete, the facial feature extraction and recognition module extracts facial features from the hyperspectral images and completes the recognition process.

2.2. Automated Recognition Technical Approach

2.2.1. Acquisition and Preprocessing

In the system described in this paper, hyperspectral facial image acquisition is achieved using a hyperspectral camera with a USB interface in the image acquisition module. The preprocessing of hyperspectral facial images focuses on image denoising and contrast enhancement to improve the clarity of the acquired hyperspectral facial images. The specific denoising process for hyperspectral facial images is as follows:

(1). Median Filtering: Apply a median filter with a window size of 3×3 to the acquired hyperspectral facial images.

(2). Wavelet Decomposition: Perform a 3-level wavelet decomposition using the coif4 (Coiflet) wavelet basis on the hyperspectral facial images obtained from step (1). Use the resulting scaling and wavelet coefficients to construct the coefficient vector \( H \).

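(3). Thresholding: Apply thresholding to \( H \). In this paper, a soft thresholding function is used, which can be described by the following formula:

\[ H' = \begin{cases} \operatorname{sgn}(H)\,(|H| - \lambda), & |H| \ge \lambda \\ 0, & |H| < \lambda \end{cases} \tag{1} \]

In the formula: the general threshold is denoted by \( \lambda \); the thresholded \( H \) is denoted by \( H' \).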

(4). Wavelet Reconstruction: Use the new coefficient vector obtained from step (3) to perform wavelet reconstruction, completing the denoising of the hyperspectral facial images.

Contrast enhancement of the hyperspectral facial images focuses on enhancing the high-frequency components. In this paper, local contrast enhancement is performed over 3×3 pixel neighborhoods of the original hyperspectral facial images. The image preprocessing module implements this enhancement with a local statistical method, which can be described as follows:

\[ f(i,j) = h'(i,j)\,(1-g) + g\,h(i,j) \tag{2} \]

In the formula: the luminance values of the high-frequency components of the input and output hyperspectral facial images are denoted by \( h(i, j) \) and \( f(i, j) \), respectively; the gain factor and the local average value are denoted by \( g \) and \( h'(i, j) \). The gain factor \( g \) should not be set too low, because a very small value easily blurs the image, which defeats the purpose of enhancement. Given the purpose of the operation and past experience, \( g \) is typically chosen in the range \( g \in [0.75, 1.05] \).
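As a rough illustration, the 3×3 median filter from step (1), the soft thresholding rule from step (3), and the local contrast enhancement of Equation (2) can be sketched with NumPy as follows. This is a minimal sketch, not the system's implementation: the function names, the edge-replication padding, and the per-band processing are assumptions, and the wavelet decomposition and reconstruction steps are left out.

```python
import numpy as np


def _windows_3x3(band: np.ndarray) -> np.ndarray:
    """Stack the nine 3x3 neighbours of every pixel (edges replicated; an assumption)."""
    padded = np.pad(band.astype(float), 1, mode="edge")
    rows, cols = band.shape
    return np.stack([padded[r:r + rows, c:c + cols]
                     for r in range(3) for c in range(3)])


def median_filter_3x3(band: np.ndarray) -> np.ndarray:
    """Step (1): 3x3 median filter applied to one spectral band."""
    return np.median(_windows_3x3(band), axis=0)


def soft_threshold(coeffs: np.ndarray, lam: float) -> np.ndarray:
    """Step (3): shrink coefficients toward zero by lam; zero those below lam."""
    return np.sign(coeffs) * np.maximum(np.abs(coeffs) - lam, 0.0)


def local_contrast_enhance(band: np.ndarray, gain: float = 1.05) -> np.ndarray:
    """Equation (2): f = h' * (1 - g) + g * h, with h' the 3x3 local mean."""
    local_mean = _windows_3x3(band).mean(axis=0)
    return local_mean * (1.0 - gain) + gain * band.astype(float)
```

With `gain` equal to 1 the image is returned unchanged; values above 1 amplify deviations from the local mean, and values below 1 pull each pixel toward the local mean, which is the blurring effect the text warns about.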

2.2.2. Facial Region Detection and Extraction Process

In this study, facial detection is achieved by loading the Haar face classifier. The specific facial detection process is illustrated in Figure 1.

Figure 1: Facial Detection Process

In this paper, the LBP (Local Binary Patterns) algorithm is used in the facial feature extraction and recognition module to perform feature extraction on the facial regions obtained from the facial detection operation. The specific feature extraction process is as follows:

(1). Image Partitioning: Divide the facial region image into 256 blocks, each with a size of 16×16 pixels.

(2). LBP Calculation: Select a 3×3 window and compare the grayscale value of the central pixel with the grayscale values of the surrounding 8 pixels. If the grayscale value of a surrounding pixel is less than the central pixel's value, assign a value of 0 to that pixel; otherwise, assign a value of 1.

(3). Binary Sequence Conversion: Convert each binary sequence obtained in step (2) into decimal form and compute a histogram of the resulting values within each block from step (1).
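The three steps above can be sketched in NumPy as shown below. This is a hedged sketch under stated assumptions: the function names are hypothetical, the face region is assumed to be a 256×256 grayscale image (so that 16×16 blocks give 256 blocks), the bit order of the eight neighbours is an arbitrary clockwise choice, and image borders are handled by edge replication.

```python
import numpy as np


def lbp_codes(gray: np.ndarray) -> np.ndarray:
    """Step (2): 8-bit LBP code per pixel; a neighbour >= centre contributes bit 1."""
    padded = np.pad(gray.astype(np.int32), 1, mode="edge")  # border handling is an assumption
    rows, cols = gray.shape
    center = padded[1:-1, 1:-1]
    # Clockwise from the top-left neighbour (ordering is an assumption).
    offsets = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]
    codes = np.zeros((rows, cols), dtype=np.int32)
    for bit, (dr, dc) in enumerate(offsets):
        neighbour = padded[dr:dr + rows, dc:dc + cols]
        codes |= (neighbour >= center).astype(np.int32) << (7 - bit)
    return codes


def block_histograms(codes: np.ndarray, block: int = 16) -> np.ndarray:
    """Steps (1) and (3): split into block x block tiles and histogram the codes."""
    rows, cols = codes.shape
    features = []
    for r in range(0, rows - block + 1, block):
        for c in range(0, cols - block + 1, block):
            tile = codes[r:r + block, c:c + block]
            features.append(np.bincount(tile.ravel(), minlength=256))
    return np.concatenate(features)
```

For a 256×256 face region, `block_histograms(lbp_codes(face))` yields 256 histograms of 256 bins each, concatenated into one feature vector.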

After completing the above image feature extraction operations, a LeNet-5 convolutional neural network model, consisting of 1 input layer, 2 convolutional layers, 2 pooling layers, 1 fully connected layer, and 1 output layer, is constructed in the facial feature extraction and recognition module to complete the hyperspectral facial automated recognition task.
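To make the layer sequence concrete, the sketch below walks through the feature-map sizes of such a network. The article does not give kernel sizes, channel counts, input size, or the number of output classes, so the values used here (32×32 input, 5×5 convolutions with 6 and 16 channels, 2×2 pooling, a 120-unit fully connected layer, 20 classes) are assumptions borrowed from the classic LeNet-5 layout, and the function names are hypothetical.

```python
def conv_output_size(size: int, kernel: int, stride: int = 1) -> int:
    """Output width of an unpadded convolution or pooling window."""
    return (size - kernel) // stride + 1


def lenet5_shapes(input_size: int = 32, num_classes: int = 20):
    """List (name, channels, height, width) per layer, plus the flattened size."""
    shapes = [("input", 1, input_size, input_size)]
    channels, size = 1, input_size
    # Two convolution + pooling stages; channel counts are assumed, not from the article.
    for index, out_channels in enumerate((6, 16), start=1):
        size = conv_output_size(size, kernel=5)
        channels = out_channels
        shapes.append((f"conv{index}", channels, size, size))
        size = conv_output_size(size, kernel=2, stride=2)
        shapes.append((f"pool{index}", channels, size, size))
    flattened = channels * size * size
    shapes.append(("fc", 120, 1, 1))  # assumed width of the fully connected layer
    shapes.append(("output", num_classes, 1, 1))
    return shapes, flattened


if __name__ == "__main__":
    layers, flattened = lenet5_shapes()
    for layer in layers:
        print(layer)
    print("flattened features:", flattened)
```

Under these assumptions the seven layers match the structure described above: one input, two convolutional, two pooling, one fully connected, and one output layer.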

To prevent vanishing gradients during model training, this paper uses the Rectified Linear Unit (ReLU) function as the activation function in place of the Sigmoid function. This can be described as follows:

\[ f(x) = \max(0, x) \tag{3} \]


With the new activation function and continued model training, the system can achieve better results in hyperspectral facial automated recognition.
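The reason ReLU helps with vanishing gradients can be illustrated numerically. The sketch below is a simplified illustration, not part of the system: it ignores weights and multiplies only the activation derivatives along a chain of layers, and the helper name `chained_gradient` is hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-np.asarray(x, dtype=float)))


def sigmoid_grad(x):
    """Derivative of the Sigmoid; its maximum value is 0.25 at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


def relu(x):
    return np.maximum(0.0, np.asarray(x, dtype=float))


def relu_grad(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return (np.asarray(x, dtype=float) > 0).astype(float)


def chained_gradient(grad_fn, x, depth):
    """Product of the same activation derivative across `depth` layers."""
    return float(grad_fn(x) ** depth)


if __name__ == "__main__":
    # Best case for Sigmoid (x = 0) still shrinks by a factor of 4 per layer.
    print("sigmoid, 10 layers:", chained_gradient(sigmoid_grad, 0.0, 10))
    # For an active ReLU unit the factor stays at 1.
    print("relu, 10 layers:", chained_gradient(relu_grad, 1.0, 10))
```

Even in its best case the Sigmoid derivative contributes a factor of 0.25 per layer, while an active ReLU unit contributes a factor of 1, so the backpropagated signal does not shrink for that reason.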

3. Solution Design

3.1. Facial Data Acquisition

Staff randomly selected twenty on-site test subjects. In addition, 800 hyperspectral facial image samples were randomly chosen from a facial database and mixed with the hyperspectral facial image samples of the on-site test subjects, creating the hyperspectral facial image sample database used in this paper.

3.2. Application Background

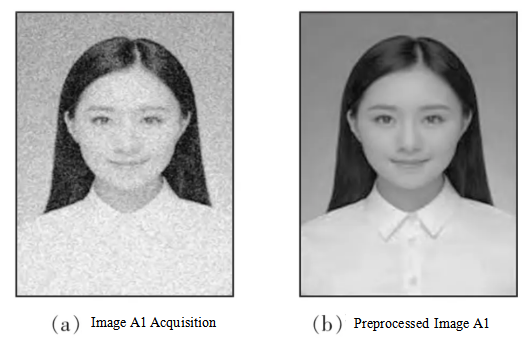

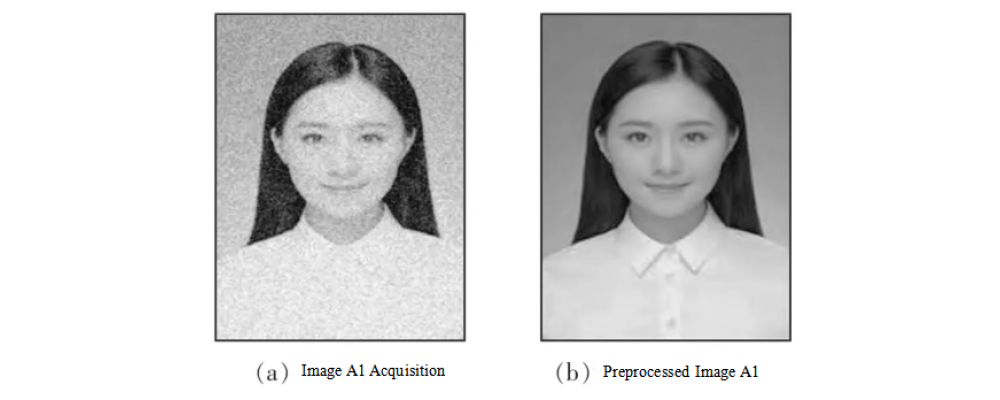

Hyperspectral devices were used to collect and preprocess hyperspectral facial images of on-site testers A1 to A20. The acquired hyperspectral images and their preprocessing results were then prepared for subsequent machine learning training.

3.3. Specific Implementation Process

3.3.1. Preparation Stage

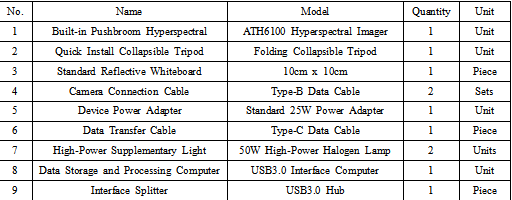

(1). Initial Setup: Configure the equipment to meet project requirements and prepare the equipment list.

Table 2-1: Built-in Pushbroom Hyperspectral Configuration List

(2). After the instruments are calibrated at the company, check the equipment against the configuration list to ensure that nothing is missing.

(3). Set up the tripod on-site and secure the built-in pushbroom portable hyperspectral imager ATH6100 to it, ensuring that the imager is firmly fixed and able to rotate. Connect two Type-B data cables, one Type-C data cable, and the power cable.

3.3.2. Data Acquisition Stage

Hyperspectral images were acquired as shown in Figure 2. Analysis of Figure 2 indicates that the equipment effectively captures and preprocesses hyperspectral facial images. Regardless of whether the shooting conditions are bright or dim, the equipment can process the acquired hyperspectral images into visually comfortable images, providing reliable data support for hyperspectral facial automated recognition tasks.

Figure 2: Hyperspectral Image Acquisition and Preprocessing Results

4. Project Deliverables

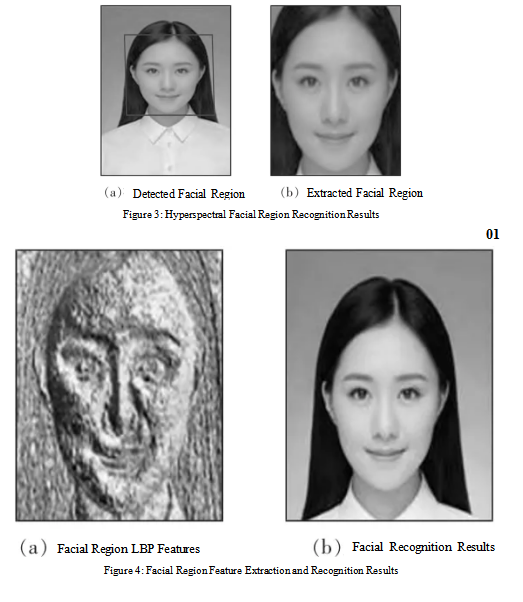

Taking the preprocessed image of on-site tester A1 as an example, hyperspectral facial image detection, feature extraction, and recognition were performed. The detection results and the feature extraction and recognition results are shown in Figures 3 and 4. Detection completed successfully, with the target facial region effectively identified in the images, and the LBP features of the target facial region were extracted with good results. The convolutional neural network facial recognition model trained quickly, converging in approximately 8 seconds with the loss function value approaching zero.
